The privacy paradox: how Europe's new AI guidelines reshape data protection

- Erwin SOTIRI

- Dec 22, 2024


The European Data Protection Board (EDPB) has issued its landmark Opinion 28/2024, which addresses key data protection aspects of AI models. The opinion provides guidance on several areas that affect both developers and users of AI systems.

The significance of this opinion is hard to overstate. It marks the first comprehensive regulatory guidance addressing the unique privacy challenges posed by AI models. Prior to this guidance, organisations operated in a regulatory grey area, attempting to apply traditional data protection principles to increasingly sophisticated AI systems. The opinion brings clarity to this complexity, offering concrete guidance on crucial aspects such as anonymisation standards, legitimate interest assessments, and the distinction between development and deployment phases.

From now on, every organisation developing or deploying AI systems within the European Union's jurisdiction must reassess their practices. The opinion's reach extends beyond pure AI companies to encompass any entity using AI-powered tools for data processing. From financial institutions using AI for fraud detection to healthcare providers implementing diagnostic systems, the implications ripple across all sectors.

The timing of this opinion proves particularly crucial. As AI capabilities advance at an unprecedented pace, the need for clear regulatory guidance becomes increasingly urgent. The EDPB's intervention provides a framework that balances innovation with privacy protection, offering organisations a roadmap for responsible AI development while safeguarding individual rights.

Furthermore, this opinion sets a global precedent. Given the EU's influential role in data protection regulation, exemplified by the GDPR's worldwide impact, this guidance is likely to shape international standards for AI privacy compliance. Organisations worldwide, regardless of their geographic location, would be prudent to align their practices with these guidelines, particularly if they serve European customers or process European residents' data.

The opinion's approach to practical implementation deserves particular attention. Rather than imposing rigid rules that might stifle innovation, it establishes principle-based requirements that adapt to technological evolution. This flexibility ensures the guidance remains relevant as AI technology advances while providing clear compliance benchmarks for organisations.

This article outlines the key points of the EDPB's opinion.

Personal data and anonymity

The EDPB’s opinion establishes that AI models trained with personal data cannot automatically be considered anonymous.

For an AI model to be deemed anonymous, two critical conditions must be met:

· The likelihood of direct extraction of personal data used in development must be insignificant

· The probability of obtaining personal data from queries must be negligible
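The two conditions are cumulative, which a short Python sketch can make explicit. This is an illustration only: the class name, the field names and the numeric threshold are assumptions made for the example, not values taken from the opinion, which does not reduce the assessment to a single number.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnonymityAssessment:
    # Both values are the controller's own estimates (hypothetical inputs),
    # for instance derived from extraction and query-based testing.
    extraction_likelihood: float  # condition 1: direct extraction of development data
    query_leak_likelihood: float  # condition 2: personal data obtained from queries


def is_model_anonymous(a: AnonymityAssessment, threshold: float = 0.01) -> bool:
    """Return True only if BOTH likelihoods fall below the chosen threshold.

    The threshold is an illustrative assumption, not a figure from the opinion.
    """
    for value in (a.extraction_likelihood, a.query_leak_likelihood):
        if not 0.0 <= value <= 1.0:
            raise ValueError("likelihoods must be between 0 and 1")
    return (a.extraction_likelihood < threshold
            and a.query_leak_likelihood < threshold)
```

A model that passes the first condition but fails the second is still not anonymous under this reading, so `is_model_anonymous(AnonymityAssessment(0.001, 0.2))` returns `False`.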

Legitimate interest assessment

The EDPB's opinion introduces substantial practical obligations that organisations must address to ensure compliance. These requirements fundamentally reshape how organisations approach AI development and deployment.

Implementing anonymisation measures

Organisations must maintain detailed records of their anonymisation protocols, including:

Technical documentation: Each anonymisation technique requires thorough documentation of its implementation, effectiveness testing, and ongoing monitoring. This includes recording the mathematical models used, the statistical analyses performed, and the technical safeguards implemented.

Validation protocols: Organisations must document their validation methodologies, demonstrating how they verify the effectiveness of anonymisation measures. This comprises both initial validation and periodic reassessment procedures.

Legitimate interest documentation

The documentation of legitimate interests extends beyond mere record-keeping.

Interest analysis framework: Organisations must maintain comprehensive records demonstrating how their legitimate interests align with legal requirements. This includes documenting:

· The specific business needs driving data processing

· The relationship between processing activities and stated objectives

· Evidence supporting the necessity of chosen processing methods

Balancing test evidence: Documentation must include detailed analyses of how organisations balance their interests against individual rights, including:

· Assessment criteria used

· Stakeholder consultation processes

· Mitigation measures implemented
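One way to keep track of the six documentation items listed above is a simple record with a completeness check. The sketch below is a hypothetical structure: the class and field names are invented for illustration and do not come from the opinion.

```python
from dataclasses import dataclass, field, fields


@dataclass
class LegitimateInterestRecord:
    # Interest analysis framework (field names are illustrative assumptions)
    business_need: str = ""
    link_to_objectives: str = ""
    necessity_evidence: str = ""
    # Balancing test evidence
    assessment_criteria: list[str] = field(default_factory=list)
    stakeholder_consultation: str = ""
    mitigation_measures: list[str] = field(default_factory=list)

    def missing_items(self) -> list[str]:
        """List every item that is still empty, in declaration order."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


record = LegitimateInterestRecord(business_need="fraud detection")
print(record.missing_items())
```

A record created with only the business need filled in reports the other five items as missing, which gives a reviewer a quick view of what remains to be documented.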

Risk assessment: A dynamic approach

The opinion mandates a sophisticated, multi-layered risk assessment strategy that requires continuous attention and adaptation.

Identification risk evaluation

Organisations must establish systematic approaches to:

· Track potential re-identification risks

· Monitor emerging attack vectors

· Assess the effectiveness of existing safeguards

This includes regular evaluation of:

· New de-anonymisation techniques

· Advancing computational capabilities

· Emerging privacy-enhancing technologies

Fundamental rights impact

This assessment requires:

· Detailed analysis of potential impacts on individual privacy rights

· Consideration of collective impacts on specific groups or communities

· Evaluation of broader societal implications

Technological evolution monitoring

Organisations must establish:

· Systems for tracking relevant technological developments

· Processes for assessing the impact of new technologies on existing protection measures

· Procedures for implementing necessary updates or modifications

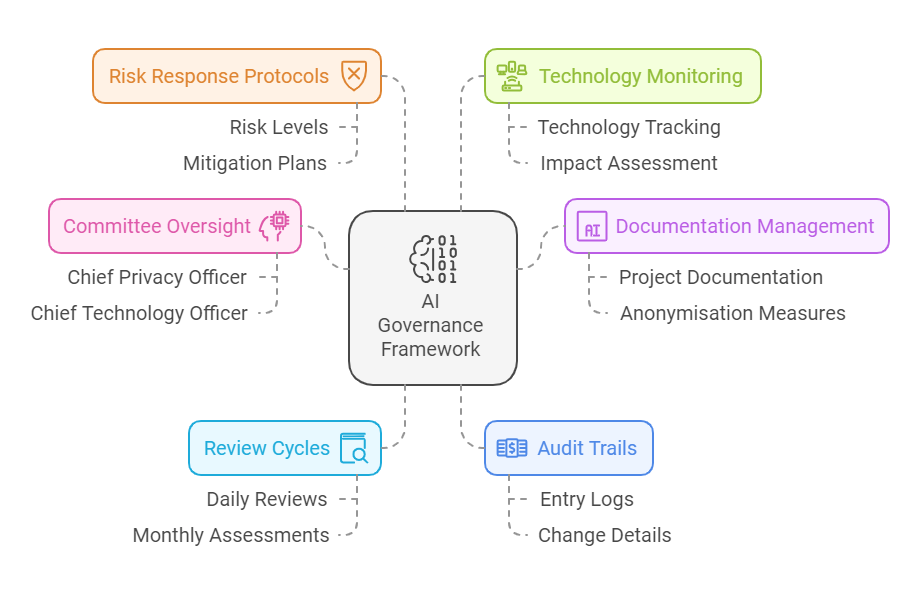

Practical implementation strategy

To effectively meet these requirements, organisations should:

· Establish clear governance structures

· Develop comprehensive documentation templates

· Implement regular review cycles

· Maintain audit trails

· Create response protocols for identified risks
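As an illustration of a regular review cycle, the following Python sketch flags assessments whose last review is older than a chosen interval. The function name, the assessment labels and the 180-day interval are assumptions made for the example; the opinion does not prescribe a review frequency.

```python
from datetime import date, timedelta


def overdue_reviews(last_reviews: dict[str, date],
                    today: date,
                    interval_days: int = 180) -> list[str]:
    """Return the names of assessments not reviewed within the interval.

    interval_days is an illustrative assumption, not a regulatory figure.
    """
    limit = timedelta(days=interval_days)
    return sorted(name for name, last in last_reviews.items()
                  if today - last >= limit)


reviews = {
    "re-identification risk": date(2024, 1, 10),
    "balancing test": date(2024, 11, 1),
}
print(overdue_reviews(reviews, today=date(2024, 12, 22)))
```

Keeping the review dates in one place also supports the audit trail, since each update to the mapping can be logged alongside the reviewer and the outcome.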

Development vs. deployment phases

The EDPB Opinion makes a crucial distinction between the development and deployment phases of AI models because each phase involves different data protection considerations and legal implications. The EDPB emphasises that development and deployment may constitute separate processing activities with distinct purposes, requiring individual assessment of:

· Legal basis for processing

· Data protection compliance

· Controller responsibilities

Development phase

The development phase encompasses all activities before the AI model goes into operation, including:

· Code development

· Training data collection

· Pre-processing of personal data

· Model training and validation

Deployment phase

The deployment phase covers all operational aspects after development:

· The model's operational application

· Ongoing processing activities

· Integration into broader AI systems

Impact of unlawful processing

The EDPB outlines three scenarios regarding unlawful processing:

Scenario 1: When personal data remains in the model and is processed by the same controller

· Case-by-case assessment required

· Evaluation of separate processing purposes

Scenario 2: When personal data remains in the model but is processed by a different controller

· New controller must conduct appropriate assessment

· Consider source of personal data

· Evaluate previous infringement findings

Scenario 3: When the model is anonymised after unlawful processing

· If truly anonymous, the GDPR would not apply to subsequent operations

· Lawfulness of deployment phase not impacted by initial unlawful processing
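The three scenarios can be read as a small decision tree driven by two questions: does personal data remain in the model, and is the deploying controller the one that developed it? The Python sketch below encodes that reading. The names `Scenario`, `classify_scenario` and `FOLLOW_UP` are hypothetical, and the follow-up items simply restate the bullet points above; a real assessment remains case by case.

```python
from enum import Enum


class Scenario(Enum):
    SAME_CONTROLLER = 1       # data remains, same controller
    DIFFERENT_CONTROLLER = 2  # data remains, different controller
    ANONYMISED = 3            # model anonymised after unlawful processing


def classify_scenario(personal_data_retained: bool,
                      same_controller: bool) -> Scenario:
    """Map the two questions onto the three scenarios (illustrative only)."""
    if not personal_data_retained:
        return Scenario.ANONYMISED
    if same_controller:
        return Scenario.SAME_CONTROLLER
    return Scenario.DIFFERENT_CONTROLLER


# Follow-up points per scenario, restated from the article's bullet lists.
FOLLOW_UP = {
    Scenario.SAME_CONTROLLER: [
        "case-by-case assessment",
        "evaluate separate processing purposes",
    ],
    Scenario.DIFFERENT_CONTROLLER: [
        "new controller conducts appropriate assessment",
        "consider source of personal data",
        "evaluate previous infringement findings",
    ],
    Scenario.ANONYMISED: [
        "GDPR would not apply to subsequent operations if truly anonymous",
        "deployment lawfulness not impacted by initial unlawful processing",
    ],
}

print(FOLLOW_UP[classify_scenario(personal_data_retained=True,
                                  same_controller=False)])
```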

Practical implications

The consequences are twofold: documentation and risk assessment.

Documentation requirements

Controllers must maintain comprehensive documentation demonstrating:

· Anonymisation measures implemented

· Legitimate interest assessments

· Compliance with data protection principles

Risk assessment

The opinion emphasises the need for:

· Regular evaluation of identification risks

· Assessment of potential impacts on fundamental rights

· Consideration of technological evolution

Future considerations

The EDPB acknowledges that AI technologies are rapidly evolving and that its guidance should be interpreted in light of technological advancement.

This flexible approach keeps the opinion relevant while providing clear principles for compliance. The opinion represents a significant step in clarifying how data protection principles apply to AI models, offering practical guidance that can accommodate future technological developments.